With the rise of Chat GPT4 and large language models, it is clear that we have entered the age of Artificial Intelligence. The information revolution is here to stay. The question now is, how are we going to use AI in a way that is ethical, moral, and sustainable? How can we use AI to improve our work while ensuring human connection, authenticity, and dignity? How can AI open up vast possibilities for preserving and sharing the Dharma with a world deeply in need of the Buddha's teachings of compassion, insight, and interdependence?

We are exploring how AI can help to solve problems and challenges for Buddhist digital humanities, and particularly for libraries and archives. In our previous article, our developer Élie Roux describes a novel approach to an old problem, the problem of cataloging (in our case, Buddhist texts). To continue along this theme, we are happy to announce the creation of an exciting new tool to crop and process images using AI, which up until now has been a laborious and manual process. Please read Élie Roux's article for all the details.

Training AI to Crop Manuscripts

Élie Roux

Can Artificial Intelligence help BDRC crop images more efficiently? Is this true or is it a SCAM? Why not both!

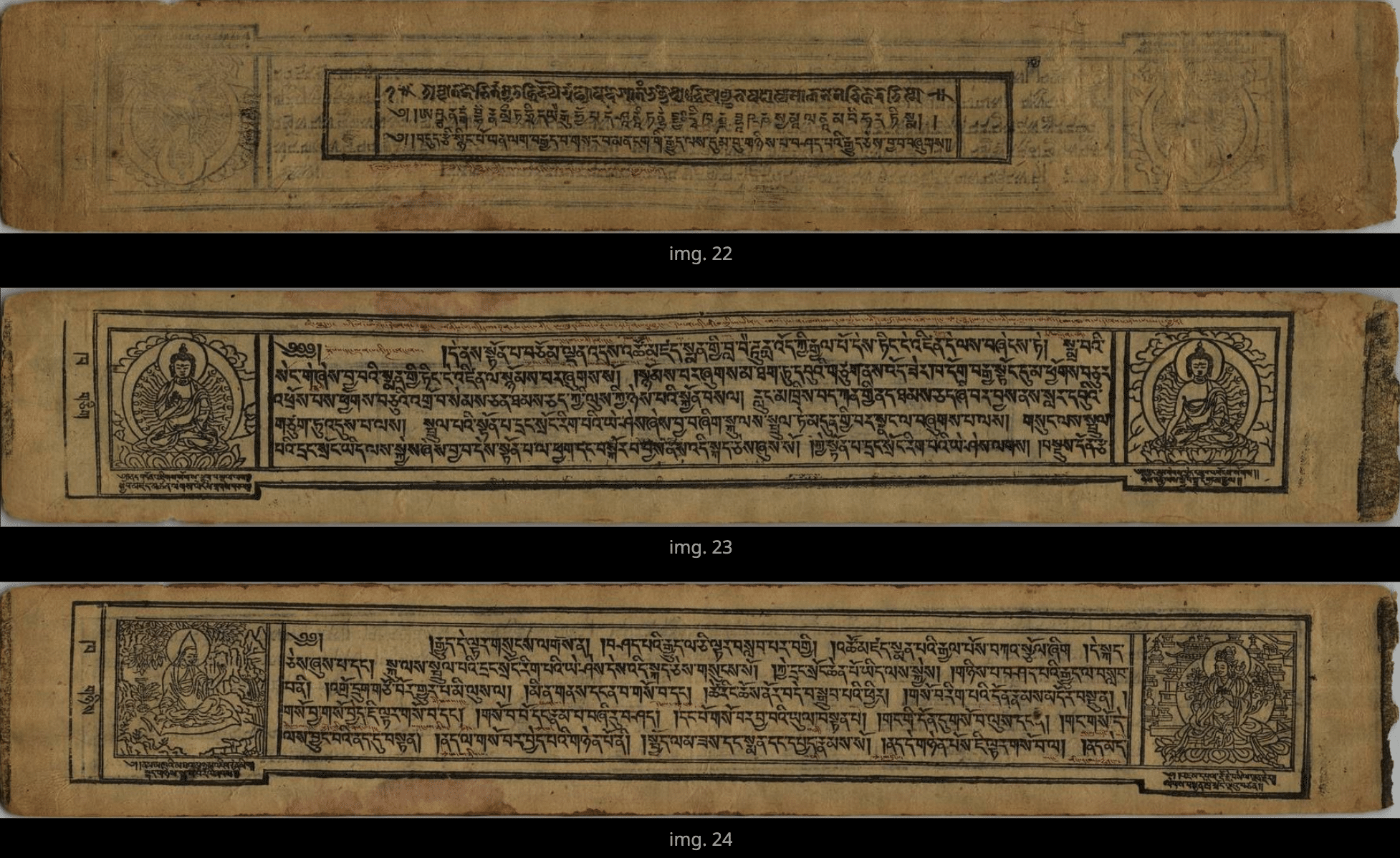

In our archive, BDRC has historically presented each text so that each image of a text contained only one page. When users read Buddhist texts on our site, they see a display of one page at a time which shows very little of the physical background surrounding the text when we scanned it, as can be seen below.

Readers find our texts easy to read and reprint because of the care we've always put into 'photoshopping' the scans, all 35 million pages of them. Arranging each text page like this, within a single image, means that the pages are easier to reorganize, reprint, and to OCR, but does have the downside of requiring special processing of each image.

BDRC's images come from a variety of sources, operating in different environments. On one end of the spectrum, there is a professional scanning operation like at the National Library of Mongolia. As shown in the photo below, this NLM scanning operation is controlled, with image processing done on site, and is easily automated.

A professional scanning operation at the National Library of Mongolia

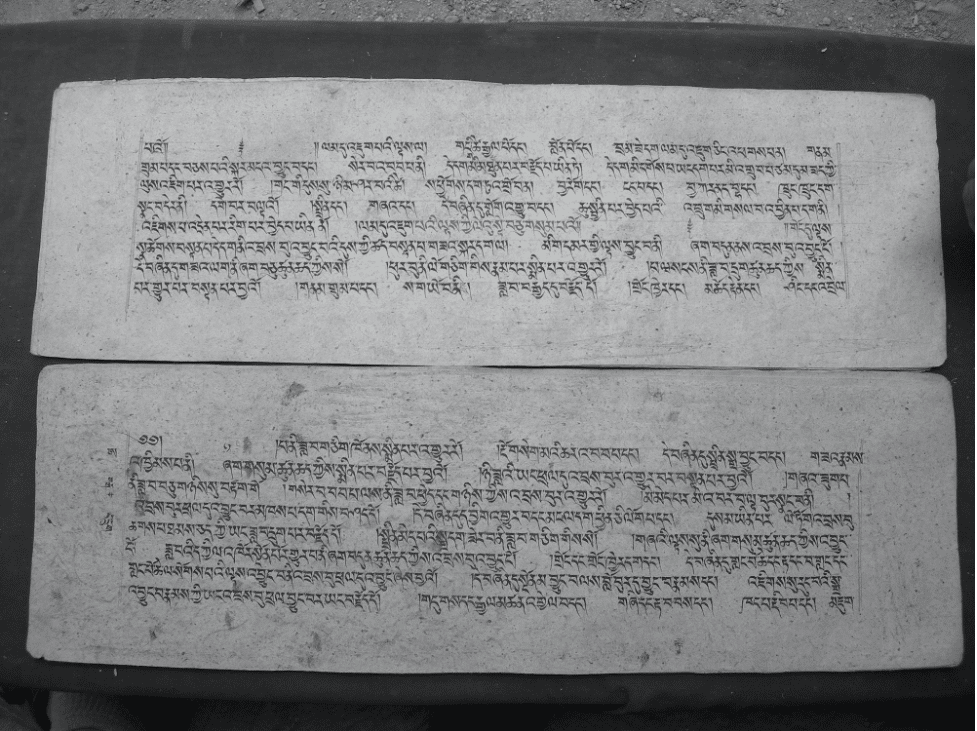

Along the spectrum we can also find images like those taken by the Tibetan Manuscripts Project Vienna (TMPV) on field trips in India (see our article on TMPV here and browse their collection in our archive), resulting in images containing 2 page sides of a pecha (image), and too irregular to be cut at a constant position.

Bruno Lainé & Géza Bethlenfalvy on an image acquisition trip in Ladakh for Tibetan Manuscripts Project Vienna

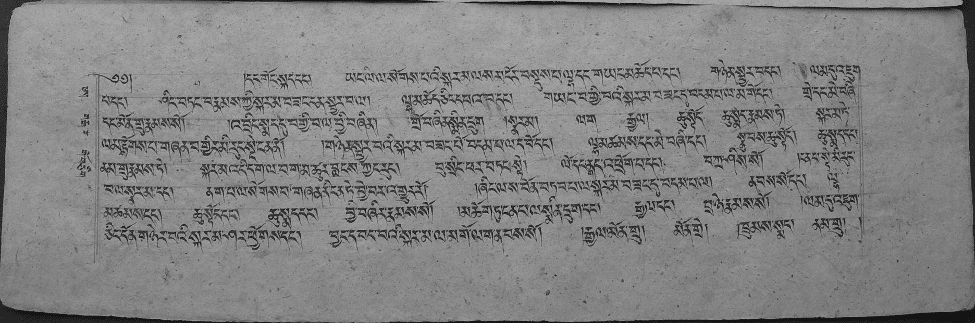

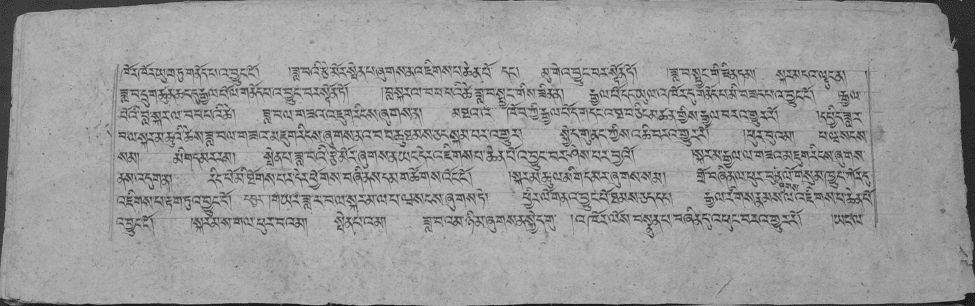

Text Scan 2. Example of an image taken at Basgo monastery by TMPV

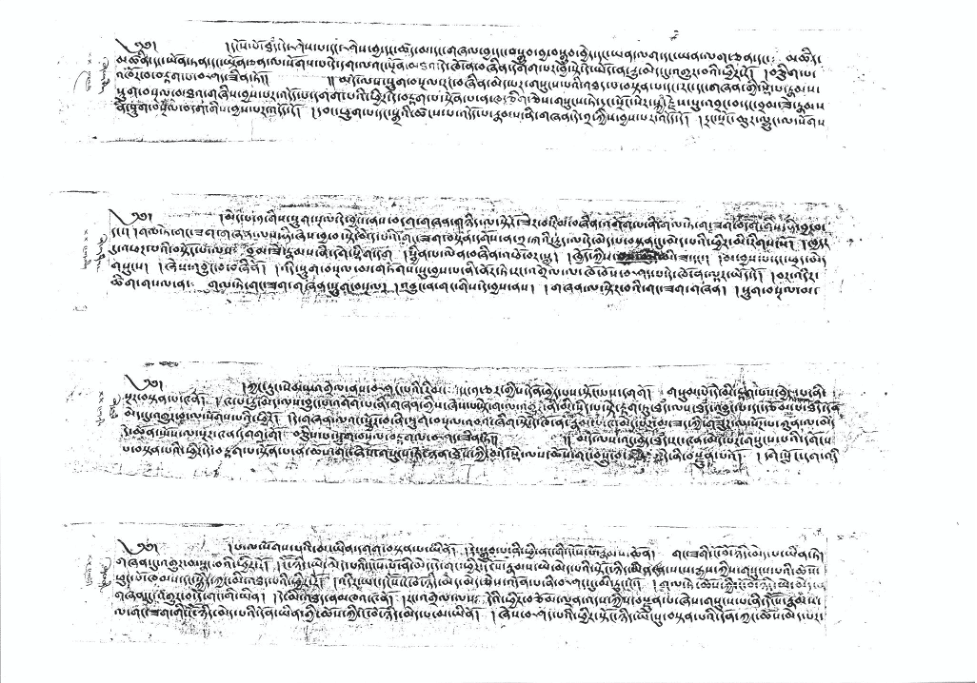

At the other end of the spectrum are images scanned from microfilms or photocopies where page boundaries are not always visible and where pages are positioned in a different place on each image:

Text Scan 3. Image of a photocopy of a microfilm contributed to BDRC

Processing these tens of thousands of images to fit them to BDRC standards is a task that is very difficult to automate and has consistently consumed a lot of human resources. BDRC has often had an intimidating backlog of images that require processing, and this has been one of the bottlenecks of the organizations.

But automation is one of the promises of AI (for better or worse). And we thought, perhaps AI can help us automate the difficult process of image cropping! One of the major developments in Computer Vision (AI for images) in the last few months has been the release by Meta of the Segment Anything Model (or SAM, see announcement). SAM is an open source AI model that aims at detecting all the elements in an image and is trained on an extremely impressive dataset (1 billion manual annotations on 10 million images!).

As soon as the release of SAM was announced, BDRC started to test it on some of our images, with very promising results. We then took our experiment a step further and built the prototype of a tool to extract manuscript pages from the images. Called the Segment and Crop Anything Model (SCAM), we have made the tool freely available to the public by openly sharing the code on Github under a free license.

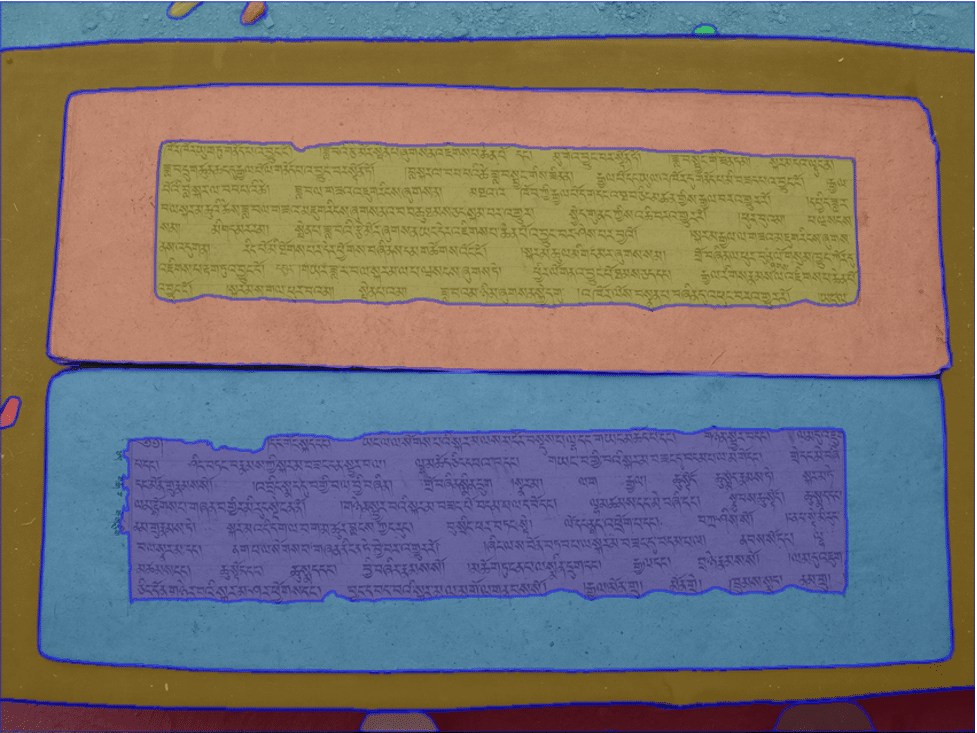

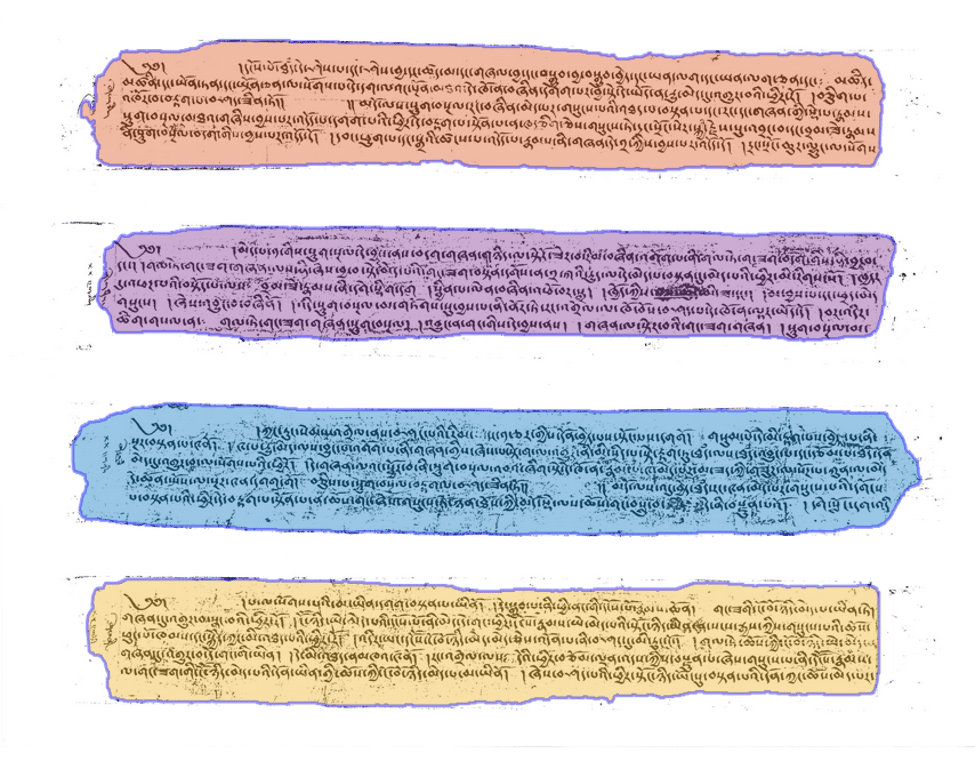

One of the main actions of the tool is to select the pages among the different annotations returned by SAM. Let's use the image from Basgo as an example:

Text Scan 4

While it's very easy for a human to intuit which annotations (colors) are pages and which are not, it is unfortunately not a straightforward task for a machine, and we had to implement a set of heuristics based on, for instance:

- Squarishness (the area must look like a rectangle)

- Minimum area of the image (we don't select small annotations)

- Expected number of pages

- Aspect ratio range (the rectangle must have a certain shape)

- Must not touch certain borders of the image (typically top and bottom)

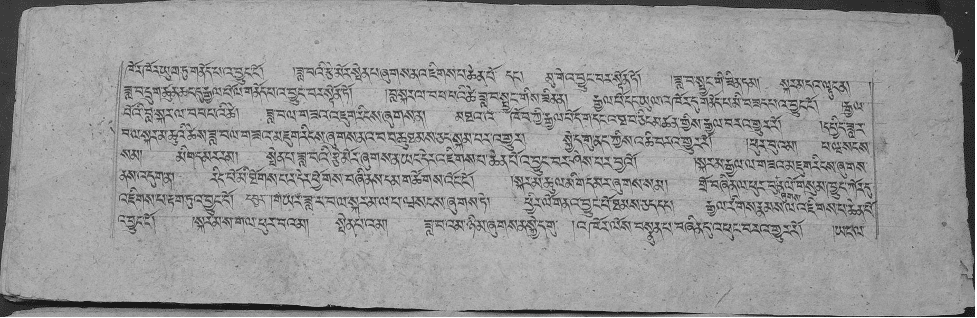

After applying these heuristics and extracting the pages, we automatically get these two images:

Text Scan 5

Text Scan 6

Which are close to perfect! The tool even implements an option to rotate the images. Using this option on the first image of our example makes it look more straight, at the expense of a little blurring (which is why it was not applied on this set):

Text Scan 7

After some testing, the tool is viable and performs at almost 100% on easy cases. For more difficult images the accuracy goes down a little (97%), and accuracy goes down more significantly on very difficult cases. For instance, on the example above from Text Scan 3, SAM finds the following annotations:

While these are quite reasonable, they would result in suboptimal images that are cropped too tightly around the text.

BDRC has already started to use the tool and you can see some of the first results online in the archive on this old tantra text. These required very little manual intervention and the rotation was even applied, resulting in excellent results.

We are delighted to be able to use AI to crop and process text images more efficiently, which will allow us to serve our readers better. Thousands of volumes, which might have languished in our backlog for years, will now be processed in a much faster and easier manner. You can expect hundreds of more volumes online soon to browse and read!

Sorry, the comment form is closed at this time.